For a few years, I’ve been using mysqli prepared statements for all php-mysql interations on all of my websites because of the inherent mysql injection protection afforded by the separation of query and data. What I have not done is harness the potential for mysqli prepared statements to enhance query speed.

Take for example the following hypothetical tables:

- A table called users contains 2 columns: userId and userName

- A table called jobs contains 2 columns: jobId and jobDescription

- A table called hires contains 2 columns: jobId and userId

We want to get a list of names for all the accountants on our payroll.

Option 1

Join everything! This is simple and easy.

SELECT `userName` FROM `users`

INNER JOIN `hires` ON `users`.`userId`=`hires`.`userId`

INNER JOIN `jobs` ON `hires`.`jobId`=`jobs`.`jobId`

WHERE `jobs`.`jobDescription`='accountant';

Unfortunately, we just scanned a table of 600 past and present employees, joined job ids onto the 500 current employees, joined 500 job descriptions to those out of our pool of 80 possible jobs and then filtered the list of 500 down to our 7 accountants!! Clearly this is very cpu intensive.

Option 2

Use a subselect. This makes writing the query much more difficult.

SELECT `users`.`username` FROM (SELECT `jobId` FROM `jobs`

WHERE `jobDescription`='accountant') AS jId

INNER JOIN `hires` ON `jId`.`jobId`=`hires`.`jobId`

INNER JOIN `users` ON `hires`.`userId`=`users`.`userId`

First the jobId for the accountant profession is selected from the jobs table. Next, the 7 accountants userIds are joined from the hires table. Finally, we join the 7 userNames corresponding to the 7 accountants. This is far more CPU efficient than option 1, but takes a lot more skill. The complexity for the developer also scales poorly with increasing numbers of tables and columns.

Option 3

We start by retrieving a list of user ids from a simple subselect:

SELECT `hires`.`userId` FROM (SELECT `jobId` FROM `jobs`

WHERE `jobs`.`jobDescription`='accountant') AS jId

INNER JOIN `hires` ON `jId`.`jobId`=`hires`.`jobId`;

Then we set up a prepared statement to look up a user’s name based on their userId and bind the variable $userId to the query.

$stmt=$db->mysqli_prepare("SELECT `userName` FROM `users` WHERE `userId`=?");

$stmt->bind_param('i',$userId);

Each time we fetch a row from our first query, we put the userId into $userId and execute the prepared statement, recycling both the database connection and the prepared statement.

Beginnings of testing with a real-world problem

In my largest website, I’ve written a database abstraction class that allows me to call a single command with 3 parameters and get a php array (or array of arrays) of data returned to me. The class already exclusively uses prepared statements to achieve separation of query and data (protecting against SQL injection attacks). This is great, but with every call to the wrapper function, a new database connection is created, a prepared statement set up, the query executed and then the connection closed. This was done to keep it simple, reliable and modular. But what would happen if I were to recycle the database connection and reuse the prepared statement?

The query that I used for this test performs a subselect based on a primary key, then joins on 4 other tables. I made sure during testing to use the SELECT SQL_NO_CACHE command to turn off mysql’s caching mechanism. I wrote a simple loop to call the wrapper function in my abstraction layer 50 times with a different primary key each time. I then created a modified version of the abstraction layer which a database connection object could be passed to, allowing it to be recycled. Finally, I pulled the prepared statement setup out of the abstraction layer and simply passed in a pre-prepared statement object to the remaining code. I cycled through the 50 executions of each variant, measuring the time taken for each query. The overall test was repeated 1000 times back to back on a single core Ubuntu VM running on my Core i7 MBP with more than a full core available for the VM to consume.

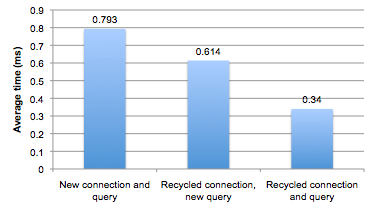

Here are the average query times:

Recycling the database connection saves 23% of the overall query time, despite being a localhost connection (and therefore very low latency). Recycling the query achieves a further 45% improvement on the recycled connection and requires less than half the time needed to connect and re-prepare the query every time.

This study only looked at executing the same statement. While situations where we need to repeatedly query based on user data e.g. to avoid one or more ORs in the WHERE clause, more testing is needed to determine whether it is faster to JOIN onto a subselect or to retrieve data from other tables via repeated executions of a prepared statement. Clearly the prepared statement route is faster if we don’t subselect but it could represent a false economy when comparing it to the option of using JOINs on a subselected initial query.